Over the last few months, you've seen me drop vague hints about the work I'm doing at MSDN. Well, today we launched it at a TechEd chalk talk (DEVTLC03), so I can finally talk about it: the MSDN/TechNet Publishing System (MTPS) Content Services.

In brief, the MTPS Content Services are a set of web services for exposing the content in MTPS. MTPS is the application I helped write a few years back that stores and processes all the content at

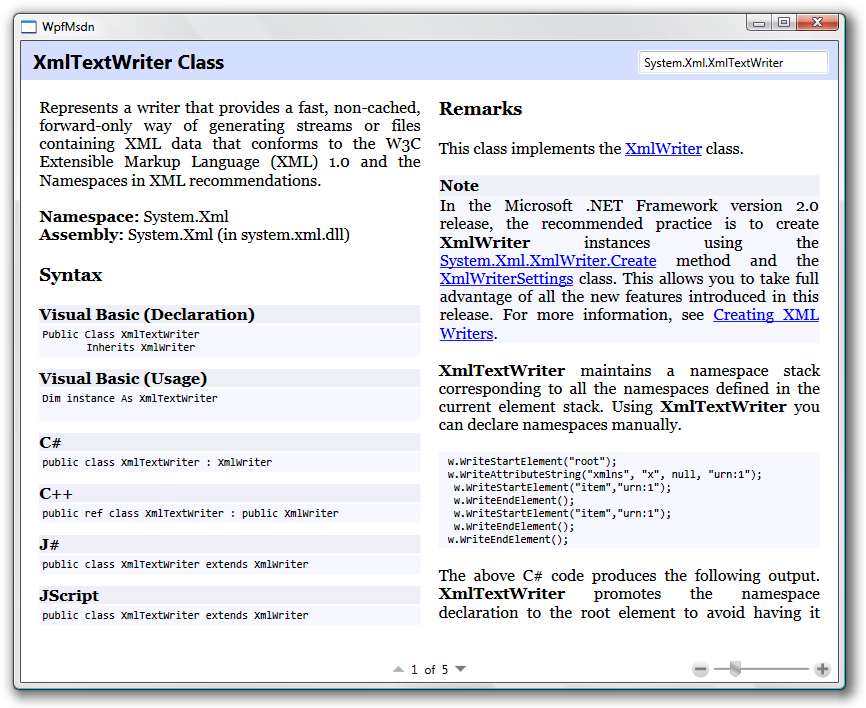

MSDN2. With the web service, you now have programmatic access to all that data via SOAP. So if you want to embed access to the documentation for System.Xml.XmlTextReader into your application, go for it. If you want to know what the child nodes of System.DateTime.ToString() are in the table of contents, you can go and find that, too. I expect to see some fairly interesting uses of the service pop up in the near future. There's such a huge amount of good information in MTPS that I imagine lots of people will want to leverage it.

The web service is reasonably well-documented here (of course, I wrote the web service and the documentation, so maybe I'm not the best person to judge the quality of the docs), but let me give a brief explanation of how it works.

The web service consists of two operations: GetContent and GetNavigationPaths. GetContent - as you might imagine - allows you to retrieve content (XHTML, GIFs, etc.) from MTPS. GetNavigationPaths lets you get the table of contents (TOC) data for the items in the system. I imagine most people will use GetContent far more often than GetNavigationPaths.

The system is organized around the concept of a content item. A content item is a collection of documents identified collectively by a content key. A document has a type, a format, and some content. The document most people will probably be interested in is the document of type primary, format Mtps.Xhtml, but there are other documents associated with a content item as well (for example, images can be stored in the content item, too). See the docs for more detail.

A content key consists of three parts: a content identifier, a locale, and a version. The locale is something like en-us (US English) or de-de (German as they speak it in Germany). The version is something like SQL.90 (SQL Server 2005).

The content identifier is a bit more complicated. It can be one of five things:

- A short ID. This is an eight-character identifier like "ms123401".

- A content alias. This is a "friendly name" for the content item, like "System.Xml.XmlTextReader".

- A content GUID. Topics can also be identified by a GUID.

- A content URL. To allow for easy integration with the HTML front end of MTPS, URLs like http://msdn2.microsoft.com/en-us/library/b8a5e1s5(VS.80).aspx can also be used to identify a content item.

- An asset ID. This is how topics are identified internally by the system, and they occasionally appear in the output. They always begin with "AssetId:".

With the exception of asset IDs, these are all the same pieces that you can already use in the URLs for MSDN2, so the concepts should be familiar if you've spent any time looking at that stuff.
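To make the shape of a content key a bit more concrete, here's a minimal sketch in Python. It is not the service's schema: the class name, the field names, and the `classify_identifier` helper are all made up for illustration, and the patterns for short IDs (eight lowercase letters or digits) and GUIDs (the usual hyphenated form) are assumptions based on the examples above. The classification is a client-side guess, nothing more.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentKey:
    """Illustrative model of a content key (field names are assumptions)."""
    content_id: str  # short ID, alias, GUID, URL, or asset ID
    locale: str      # e.g. "en-us"
    version: str     # e.g. "SQL.90"


# Assumed patterns, inferred from the examples in this post.
_GUID = re.compile(r"^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$")
_SHORT_ID = re.compile(r"^[0-9a-z]{8}$")


def classify_identifier(content_id: str) -> str:
    """Guess which of the five identifier kinds a string looks like."""
    if content_id.startswith("AssetId:"):
        return "asset_id"
    if content_id.lower().startswith(("http://", "https://")):
        return "content_url"
    if _GUID.match(content_id):
        return "content_guid"
    if _SHORT_ID.match(content_id):
        return "short_id"
    # Anything else is treated as a friendly name.
    return "content_alias"


key = ContentKey("System.Xml.XmlTextReader", "en-us", "VS.80")
print(classify_identifier(key.content_id))
```

An alias that happens to be eight lowercase characters would be misread as a short ID here, so treat the result as a hint rather than a guarantee.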

There are two slightly funky (but highly intentional) things about what GetContent returns that you'll need to keep in mind. The first is that, by default, the bodies of the documents that make up a content item are not returned. Unless you list a document in the requestedDocuments section of the request message, you'll just get the types and formats of the available documents. This is because documents can be quite large, and it would be a waste to transmit all of them every time.
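If it helps, here's a small local model of that behavior in Python. To be clear, this is not the service's code and it makes no SOAP calls; `Document`, `shape_response`, and the second document's type and format are invented for illustration. It just shows the rule: every document is listed, but only the requested ones come back with a body.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class Document:
    """Illustrative document: a type, a format, and (maybe) a body."""
    doc_type: str
    doc_format: str
    body: Optional[str] = None


def shape_response(stored: Iterable[Document],
                   requested: Iterable[Tuple[str, str]]) -> List[Document]:
    """List every document, but keep the body only for requested (type, format) pairs."""
    wanted = set(requested)
    return [
        Document(d.doc_type, d.doc_format,
                 d.body if (d.doc_type, d.doc_format) in wanted else None)
        for d in stored
    ]


stored = [
    Document("primary", "Mtps.Xhtml", "<div>topic text</div>"),
    # Placeholder type/format; the real names are in the docs.
    Document("example-type", "example-format", "large payload"),
]

for doc in shape_response(stored, [("primary", "Mtps.Xhtml")]):
    print(doc.doc_type, doc.doc_format, "body" if doc.body is not None else "no body")
```

A sensible client pattern, then, is to call once with nothing requested to see what's available, and a second time asking only for the documents you actually need.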

The other thing to be aware of is the idea of available versions and locales. If you send in a request for content item ms123401, locale en-us, version MSDN.10, you'll get back that content item, but you'll also receive a list that will tell you that the content item is also available for locale/version fr-fr/MSDN.10 and locale/version en-us/MSDN.20. This list is particularly valuable when the content key you request does not correspond to a known content item - in that case it represents the best guess by the MTPS system for reasonable alternatives.
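What you do with that list is up to you. As one possibility, here's a sketch of a client-side fallback in Python. The preference order (same locale first, then same version, then whatever comes first) is purely my assumption about what a caller might want, and `pick_alternative` is a made-up helper, not part of the service.

```python
from typing import List, Optional, Tuple

LocaleVersion = Tuple[str, str]  # (locale, version)


def pick_alternative(requested: LocaleVersion,
                     available: List[LocaleVersion]) -> Optional[LocaleVersion]:
    """Pick a locale/version pair from the list of available alternatives."""
    if requested in available:
        return requested
    locale, version = requested
    # Prefer staying in the reader's language.
    for candidate in available:
        if candidate[0] == locale:
            return candidate
    # Otherwise keep the same product version in another language.
    for candidate in available:
        if candidate[1] == version:
            return candidate
    # Fall back to the first suggestion, if there is one.
    return available[0] if available else None


available = [("fr-fr", "MSDN.10"), ("en-us", "MSDN.20")]
print(pick_alternative(("en-us", "MSDN.30"), available))
```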

GetNavigationPaths has a few twists as well. First, there's the name. We seriously considered calling it GetToc, but it's not exactly TOC data, since it's used for other things, like that little trail of links (sometimes called "breadcrumbs" or the "eyebrow") at the top of MSDN2 pages. What it really returns is all the ways to navigate between two content items. Hence, GetNavigationPaths.

GetNavigationPaths accepts two content keys. In this case, the identifier in the keys must be a short ID. (If you need to, you can resolve a short ID from an alias, a GUID, a URL or an asset ID via a call to GetContent first.) The first key identifies the root, which is the content item you'd like to start at, and the second key identifies the target, which is the content item you'd like to wind up on.
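The resolve-then-navigate flow might look something like the following Python sketch. Everything here is hypothetical: `resolve` and `get_navigation_paths` stand in for whatever wrappers you write around the two SOAP operations, and the short ID pattern (eight lowercase letters or digits) is an assumption based on the examples in this post.

```python
import re
from typing import Any, Callable, Tuple

Key = Tuple[str, str, str]  # (identifier, locale, version)

# Assumed shape of a short ID, e.g. "ms123401".
_SHORT_ID = re.compile(r"^[0-9a-z]{8}$")


def to_short_id_key(key: Key, resolve: Callable[[Key], str]) -> Key:
    """Return the key unchanged if it already uses a short ID, else resolve it."""
    identifier, locale, version = key
    if _SHORT_ID.match(identifier):
        return key
    # `resolve` is a hypothetical wrapper that calls GetContent and
    # returns the short ID of the content item it finds.
    return (resolve(key), locale, version)


def find_paths(root: Key, target: Key,
               resolve: Callable[[Key], str],
               get_navigation_paths: Callable[[Key, Key], Any]) -> Any:
    """Normalize both keys to short IDs, then ask for the navigation paths."""
    return get_navigation_paths(to_short_id_key(root, resolve),
                                to_short_id_key(target, resolve))


# Stand-ins for the real service calls, just to show the flow.
fake_ids = {"System.Xml.XmlTextReader": "ab12cd34"}
calls = find_paths(
    ("ms123401", "en-us", "VS.80"),
    ("System.Xml.XmlTextReader", "en-us", "VS.80"),
    resolve=lambda key: fake_ids[key[0]],
    get_navigation_paths=lambda root, target: (root, target),
)
print(calls)
```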

What you get back is a list of navigation paths between the root and the target. There might be more than one path, because a content item can appear in more than one place in the TOC. A navigation path is a list of navigation nodes, where each navigation node is made up of a title, a navigation node key, and a content node key. There's also some information about something called phantoms, but I'll defer that to the docs.

The title is fairly self-explanatory, but the distinction between a navigation node key and a content node key is somewhat less intuitive…I had to have it explained to me more than a few times when I was writing the system. Basically, it arises out of the fact that every node in the TOC is itself a separate content item in the system, whose content consists of a reference to the content item that TOC node represents and a list of child nodes. So the navigation node key is a content key (identifier plus version plus locale) that represents the TOC node itself, and the content node key identifies the content item the TOC node corresponds to. You can tell the difference between the two because the content item identified by the navigation node key will always have a primary document of format "Mtps.Toc".

Another way to look at it is that the navigation node key tells you where you are in the left hand tree of MSDN2, and the content node key tells you what goes in the right hand content pane.
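Here's how I'd picture the result in Python. The type and field names are made up for illustration (the real message format is in the docs), and the sketch assumes each path is ordered from the root to the target. Phantoms are left out entirely.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ContentKey:
    content_id: str
    locale: str
    version: str


@dataclass(frozen=True)
class NavigationNode:
    """One step in a navigation path (field names are assumptions)."""
    title: str
    navigation_node_key: ContentKey  # the TOC node itself (left-hand tree)
    content_node_key: ContentKey     # the content it points at (right-hand pane)


NavigationPath = List[NavigationNode]


def breadcrumb(path: NavigationPath) -> str:
    """Build the trail of titles shown at the top of a page."""
    return " > ".join(node.title for node in path)


def content_pane_key(path: NavigationPath) -> ContentKey:
    """Key of the content to show for the last node, assuming root-to-target order."""
    return path[-1].content_node_key


# Invented short IDs, purely for the example.
path = [
    NavigationNode("Library",
                   ContentKey("aa000001", "en-us", "VS.80"),
                   ContentKey("bb000001", "en-us", "VS.80")),
    NavigationNode("XmlTextReader Class",
                   ContentKey("aa000002", "en-us", "VS.80"),
                   ContentKey("bb000002", "en-us", "VS.80")),
]
print(breadcrumb(path))
print(content_pane_key(path).content_id)
```

Since there can be more than one path between two items, a real caller would be working with a list of these lists.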

Like I said, I don't expect as many people to use GetNavigationPaths as to use GetContent, so I wouldn't lose too much sleep over the details. Of course, if you do wind up using it (or any part of the system), I'd love to hear about it, or about how we could make it or the documentation better.

This was a very interesting system to write for a variety of reasons, but I think I'll save the "how" for another post. I've also got what I think is a pretty cool application of the service that (at least some) people are really going to like. More on that later, too.

We consider the system to be roughly in beta, as we already know several things we need to improve or change. That said, we feel good enough about it to turn the world loose on it. If you come up with any cool ideas about how to use the service, or ideas about how we could improve it, drop a comment here.